My takes on Dwarkesh's chip questions

If you like a podcast enough you can just invite yourself!

I thoroughly enjoyed listening to the most recent Dwarkesh podcast episode, where he interviewed Jon Y from Asianometry and Dylan Patel from SemiAnalysis. I learned a lot!

I thought I would give my own take on some of the questions he asked, with the benefit of graphs, links, and being able to Google things. Of course, I do not mean to say my takes are better than Dylan’s or Jon’s. Indeed, a huge chunk of what I know I owe to Asianometry’s videos and SemiAnalysis’ posts.

This is going to be less tight and more off the cuff than my usual posts here, but what is the point of a blog/newsletter if I cannot have fun with it? Let’s go.

Dwarkesh Patel

Why isn't there more vertical integration in the semiconductor industry? Why is it like, “This subcomponent requires this subcomponent from this other company, which requires this subcomponent from this other company…” Why isn’t more of it done in-house?

My take:

I agree with Jon and Dylan in terms of the stratification. But there is another way to look at it, from a more historical POV.

In the 1980s, AMD’s CEO said: “Real men have fabs”. While AMD would later spin out its foundry business, the idea at the time was that if you wanted to be a real semiconductor company, you made your own chips. Chip startups were building their own fabs. Things were vertically integrated!

At the same time, TSMC was a solution looking for a problem. It had just gotten started, and there was no market yet for a pure-play foundry. But Morris Chang, the founder of TSMC, believed there would be companies that would go fabless and need his services. Here’s what he said:

"When I was at TI and General Instruments I saw a lot of integrated circuit designers wanting to leave and set up their own business, but the one thing or the biggest thing that stopped them from leaving those companies was they couldn't raise enough money to form their own company. Because at the time, it was thought that every company needed manufacturing, needed their wafer manufacturing, and the most capital intensive part of a semiconductor company, of an IC company (does the manufacturing). I saw those people wanting to leave, but being stopped by the lack of ability to raise a lot of money and build a wafer fab."

This core insight was correct. TSMC started off by manufacturing the least profitable parts for Intel and other players who had their own fabs.[1] Over time, existing as a pure foundry enabled a whole ecosystem of designers to leave these large companies and create startups, which then had their chips fabbed at TSMC.

Then a flywheel kicks in: TSMC enables fabless companies, and the fabless market grows. As the market grows, more players enter, and they all go to TSMC for manufacturing.[2] It was just cheaper and better.

I suspect something similar then happened with fabrication tools, along the lines Jon described: a company dedicated to making deposition or lithography tools could build far better tools than a chipmaker could make in-house.

Much of this info comes from the excellent TSMC episode by Acquired.

Dwarkesh Patel

How big is the difference between 7nm to 5nm to 3nm? Is it a huge deal in terms of who can build the biggest cluster?

My take:

I agree with Dylan that even if we froze at the same process node, you could still get architecture gains. As a rough estimate, only about 40% of an H100’s peak FLOPS are actually utilized during training. I think it is very plausible we come up with architectural advances that raise that utilization without moving to a new process node.
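To make the 40% figure a bit more concrete, here is a minimal sketch of how model FLOPs utilization (MFU) is commonly estimated for transformer training, using the usual rule of thumb of about 6 FLOPs per parameter per token. The model size, throughput, and GPU count are hypothetical placeholders, and the ~989 TFLOPS dense BF16 peak for an H100 SXM is an assumption taken from Nvidia’s spec sheet, not something from the episode.

```python
def training_flops_per_token(n_params: float) -> float:
    """Rule of thumb: ~2N FLOPs forward + ~4N backward = ~6N per token."""
    return 6.0 * n_params


def model_flops_utilization(n_params: float, tokens_per_second: float,
                            n_gpus: int, peak_flops_per_gpu: float) -> float:
    """Fraction of the cluster's peak FLOP/s spent on useful model math."""
    achieved = training_flops_per_token(n_params) * tokens_per_second
    peak = n_gpus * peak_flops_per_gpu
    return achieved / peak


# Hypothetical numbers, for illustration only.
H100_BF16_DENSE_PEAK = 989e12  # assumed spec-sheet peak, FLOP/s per GPU

mfu = model_flops_utilization(
    n_params=70e9,             # hypothetical 70B-parameter model
    tokens_per_second=1.93e6,  # hypothetical cluster-wide training throughput
    n_gpus=2048,               # hypothetical cluster size
    peak_flops_per_gpu=H100_BF16_DENSE_PEAK,
)
print(f"MFU: {mfu:.0%}")
```

Roughly speaking, everything that is not dense math (communication stalls, memory-bound operations, restarts after failures) shows up as the gap between this number and 100%, and that gap is where architecture and systems work can help without a new node.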

Another part of this is that Moore’s Law gives you some progress directly, but having more transistors also enables different architectures, which gives you further progress. How much, quantitatively, I am not sure. However, when it comes to large clusters, the systems design (e.g., how easily chips are networked) and overall engineering matter more than single-chip architecture. See Dylan’s piece here, and mine, here.

Dwarkesh Patel

Suppose Taiwan is invaded or Taiwan has an earthquake. Nothing is shipped out of Taiwan from now on. What happens next? How would the rest of the world feel its impact a day in, a week, a month in, a year in?

My take:

The discussion quickly pointed to older nodes, and I think that is key. Almost everything you interact with, outside your phone and your laptop, uses an older node, probably above 28 nm.

TSMC has already paid off the equipment in its older fabs, and the machines generally last a long time, so they keep running even when they are old. This means most of the older fabs still push a lot of volume. Older nodes are important from an infrastructure point of view, and they are one thing the CHIPS Act has generally overlooked so far. What use is a GPU if you cannot run fridges, cars, radios, light bulbs, airplanes, and everything else that requires a chip? This has a lot of national security implications.

Stronger funding for older node fabs is an overlooked focus area for US policymakers in my opinion.

Dwarkesh Patel

[…]In this field, I'm curious about how one learns the layers of the stack. It's not just papers online. You can't just look up a tutorial on how the transformer works. It's many layers of really difficult shit.

My take:

I agree 100%, and it is extremely difficult. I think part of it is that, practically, the semiconductor industry is 20 different industries in a trench coat. There is a lot of physics, chemistry, optics, computer engineering, theory, mechanical engineering, software engineering, etc. To do anything technical at a manufacturing company, you probably need a PhD.

Even from a chip design perspective, you generally need access to extremely expensive software. Most people do not design a chip until grad school. I recommend reading Zach’s blog here to get a better sense of why you need a good amount of schooling to do chip design, unlike software engineering.

I do not know a lot, but having a degree focused on semiconductor manufacturing helped me understand the different parts involved. Interning at a fab also helped a lot. Initially, I struggled to wrap my head around how the parts fit together and ended up grilling people at lunch until I understood.

Even now, I sometimes have to read SemiAnalysis posts 2-3 times to really get what is going on.

The people I meet who really know what they are talking about, and understand many (not all!) of the layers, think about this industry day and night and talk to people all the time: asking questions, engaging with different parts of the industry, compiling notes, and so on.

Remember, even ASML ships a huge team of people to TSMC to install the EUV machines. And TSMC technicians are no slouches!

The comparison with AI is apt: I think the complexity can be demonstrated by how many people it takes to make a chip, compared to how many people it takes to make a foundation model.

It probably takes on the order of tens of thousands of people to make a chip like a GPU, especially if you count design. An engineer at Google told me it probably takes around a hundred people to make a foundation model.

Both of these estimates may be off, but I think they drive home how different the two fields are in complexity and size.

Dwarkesh Patel

If that’s the case and nobody knows the whole stack, how does the industry coordinate? “In two years we want to go to the next process which has gate all-around and for that we need X tools and X technologies developed…”

My take:

There is definitely some truth to the idea that things are argued out, and of course much of the intuition about what to do next only develops after 30 years of experience or so. Jon and Dylan cover that well.

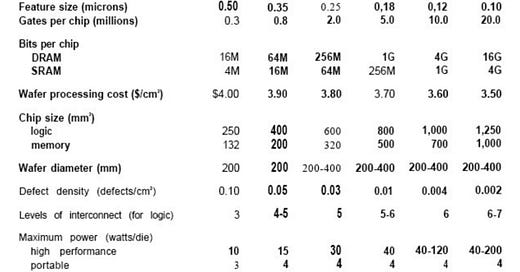

I think one other part is that, formally, it looks like the “International Technology Roadmap for Semiconductors”, which was a set of documents coordinated and produced by industry experts. The idea was to coordinate timelines and expectations of tools performance. Looks something like this:

Its successor is the “International Roadmap for Devices and Systems” (IRDS), and here is the Wikipedia page.

Now, I am unsure to what extent this affects something as specific as GPU production, but I do think it plays a large part in how the industry formalizes a lot of these conversations and resolves disagreements. I expect the rush to meet AI demand has somewhat accelerated timelines, but I am not sure by how much.

My take on AI in chip design:

Dylan mentioned RL techniques being used to design chips, and I think it is worth spending some time on this. Probably just after the episode was recorded, Google released AlphaChip.

It is completely open source. Google has used it for the last three generations of TPUs, its in-house AI chip, which I covered in my first post. While it is unclear exactly how many hours or dollars this has saved, it seems to be getting better with each generation. At Google’s volumes, the savings are probably non-trivial.

This is worth keeping an eye on. I expect Google to keep leading in AI for chip design.

Dwarkesh Patel

There are two narratives you can tell here of how this happens. One is that these AI companies training the foundation models understand the trade-offs of. How much is the marginal increase in compute versus memory worth to them? What trade-offs do they want between different kinds of memory? They understand this, so the accelerators they build can make these trade-offs in a way that's most optimal. They can also design the architecture of the model itself in a way that reflects the hardware trade-offs.

Another is NVIDIA. I don’t know how this works. Presumably, they have some sort of know-how. They're accumulating all this knowledge about how to better design this architecture and also better search tools. Who has the better moat here? Will NVIDIA keep getting better at design, getting this 100x improvement? Or will it be OpenAI and Microsoft and Amazon and Anthropic who are designing their accelerators and will keep getting better at designing the accelerator?

My take:

I do not understand enough computer architecture to give a great answer, but there are a couple of things I can comment on. TPUs have less memory than GPUs, but because of their microarchitecture, they also need less of it, so maybe it does not matter as much. My understanding is that most of the energy in H100s is spent moving data around, and higher data bandwidth would be strongly welcomed. Also, all of these accelerators use the same type of memory, HBM, so on the memory side this basically boils down to improvements in HBM.
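One simple way to see why bandwidth matters is the roofline model: a kernel’s attainable throughput is capped either by peak compute or by memory bandwidth times its arithmetic intensity (FLOPs performed per byte moved). This speaks to throughput rather than energy, but the intuition is similar. The sketch below assumes H100 SXM spec-sheet figures of roughly 989 TFLOPS dense BF16 and 3.35 TB/s of HBM3 bandwidth; the two example intensities are illustrative, not measured.

```python
PEAK_FLOPS = 989e12      # assumed H100 SXM dense BF16 peak, FLOP/s
HBM_BANDWIDTH = 3.35e12  # assumed H100 SXM HBM3 bandwidth, bytes/s


def attainable_flops(arithmetic_intensity: float,
                     peak_flops: float = PEAK_FLOPS,
                     bandwidth: float = HBM_BANDWIDTH) -> float:
    """Roofline: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, bandwidth * arithmetic_intensity)


def ridge_point(peak_flops: float = PEAK_FLOPS,
                bandwidth: float = HBM_BANDWIDTH) -> float:
    """Intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_flops / bandwidth


if __name__ == "__main__":
    print(f"ridge point: {ridge_point():.0f} FLOP/byte")
    # Illustrative intensities: a low-intensity elementwise op vs a large matmul.
    for name, intensity in [("elementwise op", 0.25), ("large matmul", 500.0)]:
        fraction = attainable_flops(intensity) / PEAK_FLOPS
        print(f"{name}: {fraction:.1%} of peak")
```

Under these assumptions, any kernel below roughly 300 FLOP/byte is limited by memory rather than compute, so faster HBM raises its ceiling directly.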

Another part that people underrate is Nvidia’s CUDA ecosystem, which is very defensible. It is interesting that AMD is not among the names you mention, despite having great chips on paper; my understanding is that this is because its software ecosystem is much worse than Nvidia’s.

I would be curious to see how Amazon or Microsoft design their chips. Do they make them CUDA-compatible somehow? I doubt Nvidia lets them, but who knows; they may develop an internal ecosystem good enough for their needs, like Google has. I touched on these topics here.

Perhaps another part is that even if someone comes out with a great chip with infinite memory and infinite compute, it could still fail. At the scale of hundreds of thousands of chips, as SemiAnalysis points out, niche things like fault tolerance start to matter a lot.

Dwarkesh Patel [to Dylan]

Wow. Okay you’re saying 1e30 you said by 2028-29. That is literally six orders of magnitude. That's like 100,000x more compute than GPT-4.

My take:

This is more than Epoch estimated in a recent post. Maybe the truth is somewhere in between; I am not sure.
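A quick sanity check on the multiple being discussed. The GPT-4 training compute used here, about 2e25 FLOP, is an outside estimate of the kind Epoch publishes, not an official figure, so treat it as an assumption.

```python
import math

GPT4_TRAINING_FLOP = 2e25  # assumed outside estimate, not an official figure
PROJECTED_FLOP = 1e30      # the 2028-29 figure discussed in the episode

ratio = PROJECTED_FLOP / GPT4_TRAINING_FLOP
orders_of_magnitude = math.log10(ratio)
print(f"{ratio:,.0f}x GPT-4, about {orders_of_magnitude:.1f} orders of magnitude")
```

Under this assumption, 1e30 works out to roughly 50,000x GPT-4, or a bit under five orders of magnitude; the exact multiple depends entirely on which GPT-4 estimate you start from.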

[1] Taiwan as a nation was also relatively weak in IC design and R&D, but it was good at manufacturing. The government actually subsidized half the cost of starting TSMC!

TSMC started as a government project. Morris was a government employee with 0% equity, and only became a billionaire by buying TSMC shares and holding them.

[2] One such fabless company, started in 1993, was Nvidia.